This paper addresses the problem of translating night-time thermal infrared images, the most widely adopted modality for analyzing night-time scenes, into daytime color images (NTIT2DC), which provide a better perception of objects. We introduce a novel model that focuses on enhancing the quality of the generated target image rather than merely colorizing it.

The proposed structural-aware GAN (StawGAN) enables the translation of better-shaped, high-definition objects in the target domain. We test our model on aerial images from the DroneVehicle dataset, which contains paired RGB-IR images. The proposed approach produces a more accurate translation than other state-of-the-art image translation models.

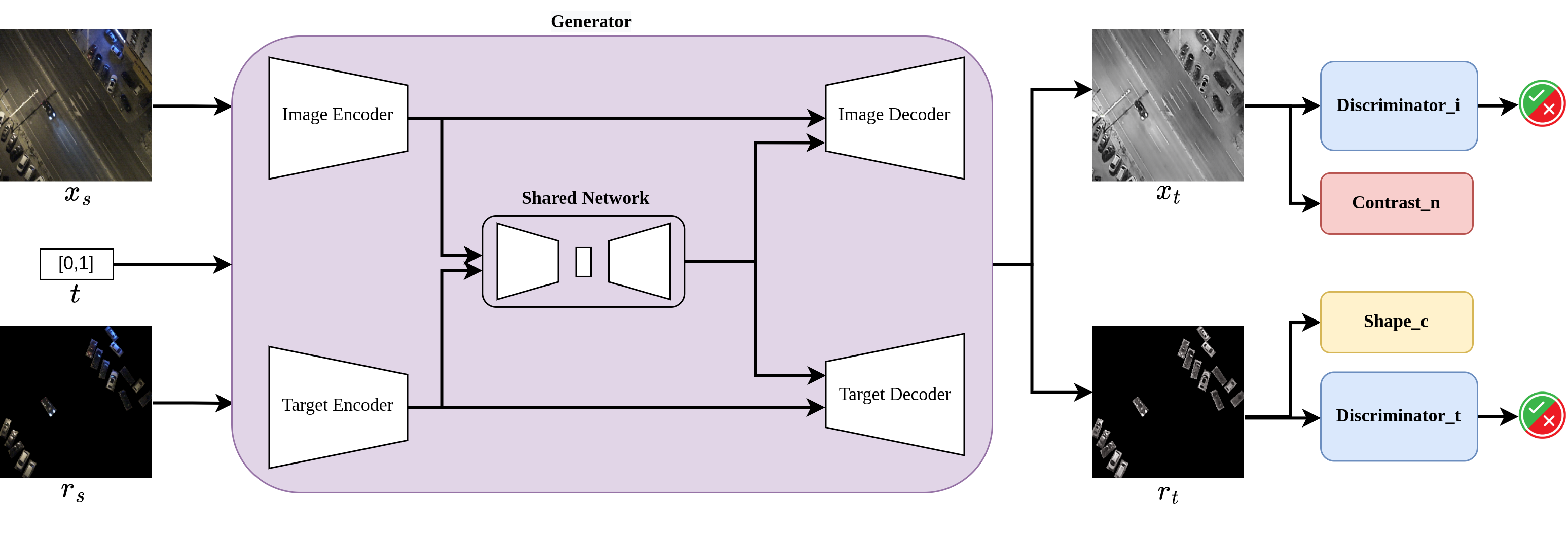

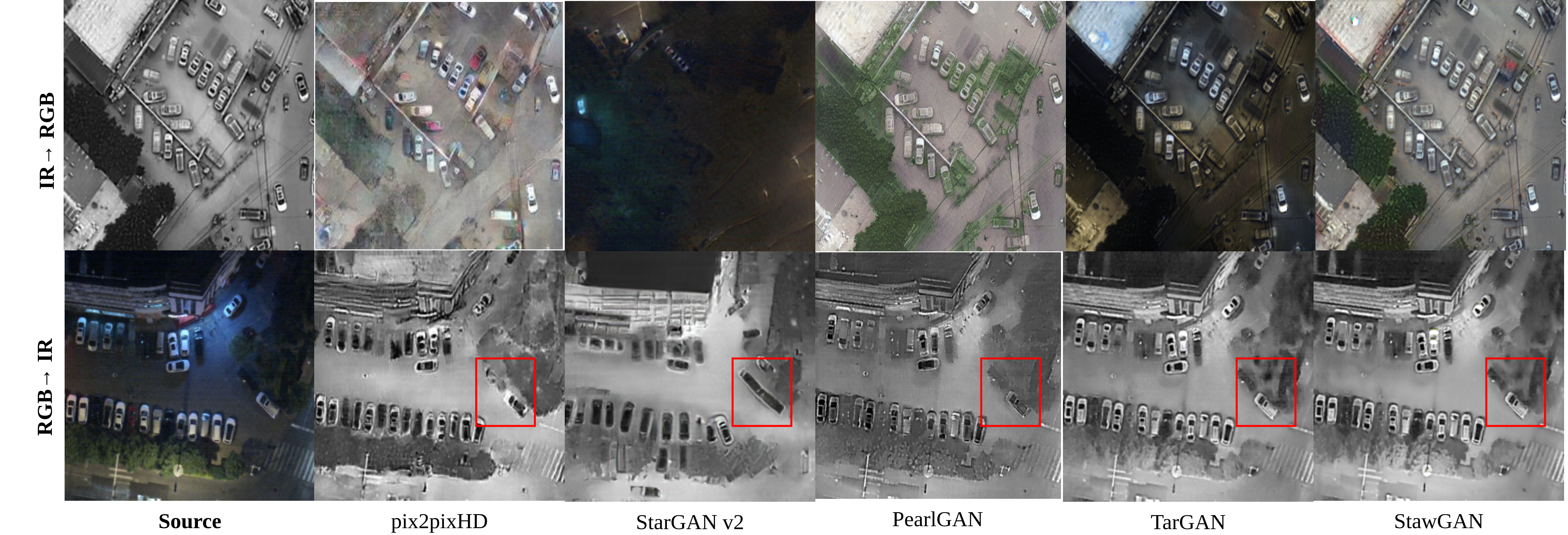

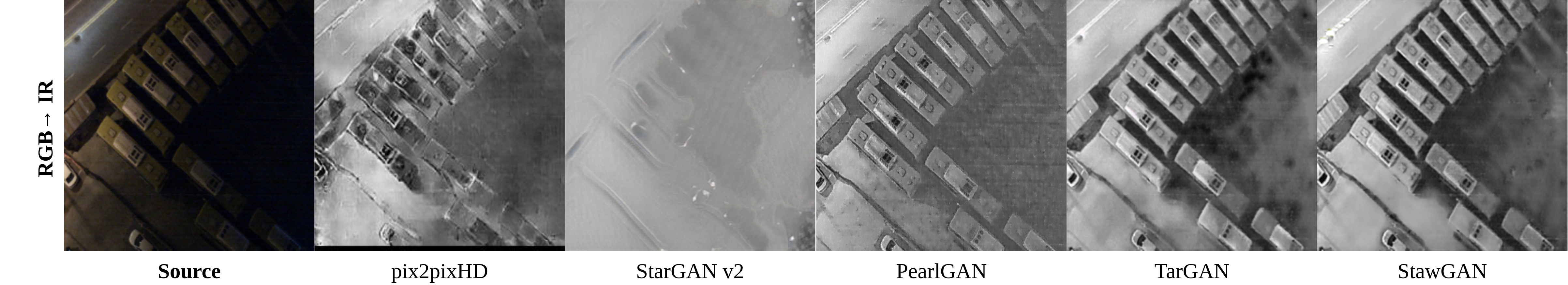

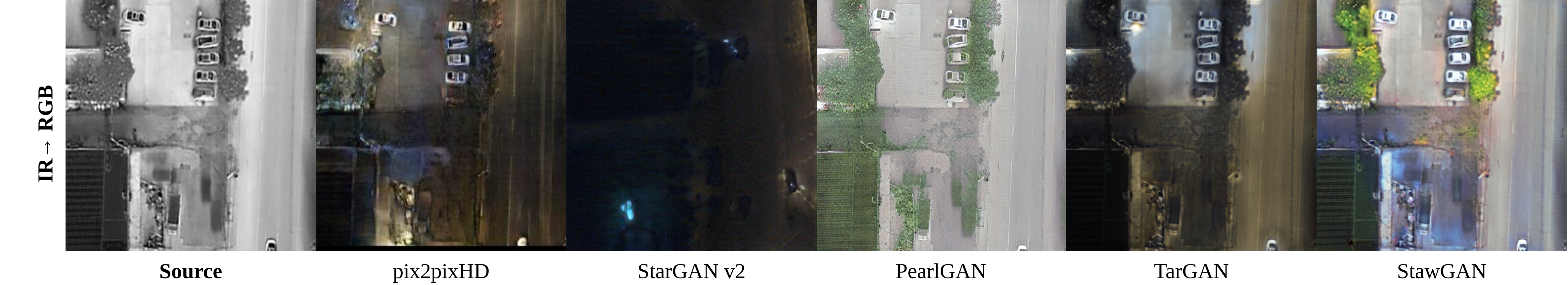

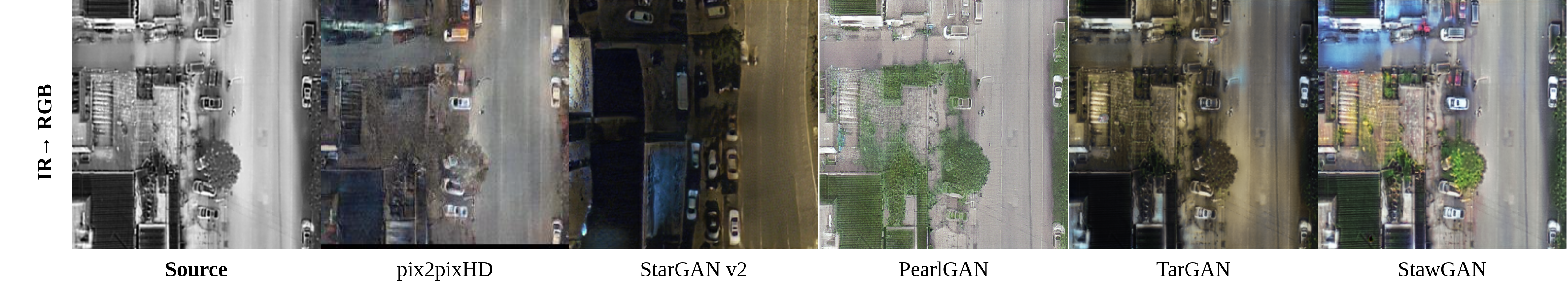

We compare our method with several models that reached state-of-the-art performance on other datasets: pix2pixHD, used for unpaired image-to-image translation; StarGAN v2, which is interesting because it extracts the style code from the scene instead of injecting it through a fixed label; PearlGAN, introduced specifically for IR-to-RGB translation; and the original version of TarGAN. The figures above show the comparison on randomly chosen samples.

For the IR → RGB translation, the results of PearlGAN are visually poor because the buildings are rendered in green, which suggests that the model interprets them as vegetation. For the RGB → IR translation, the results of StarGAN v2 are slightly blurred, mostly on the targets, while PearlGAN does not translate all the cars correctly and some of them appear ghosted. The TarGAN and pix2pixHD samples are visually comparable to ours, although our method produces more realistic colors; focusing on the red boxes, however, reveals a concrete difference. In the RGB → IR translation, pix2pixHD loses the details of the target car, while TarGAN produces black holes and a paler image.

For the opposite translation, both the color and the shape in the StawGAN image are more appealing than those of pix2pixHD. Furthermore, TarGAN loses information on the targets: its image is darker, which makes it difficult to distinguish the shape and type of the vehicle. Overall, the proposed StawGAN produces high-definition samples with realistic colorization and well-shaped, well-defined targets. The table below reports objective metrics for the image modality translation task. According to these values, pix2pixHD attains the highest IS, while our method obtains the best (lowest) FID, with pix2pixHD second, and also the best PSNR and SSIM.

| Model | FID ↓ | IS ↑ | PSNR ↑ | SSIM ↑ |
| --- | --- | --- | --- | --- |
| pix2pixHD | 0.0259 | 4.2223 | 11.2101 | 0.2125 |
| StarGAN v2 | 0.4476 | 2.7190 | 11.2211 | 0.2297 |
| PearlGAN | 0.0743 | 3.9441 | 10.8925 | 0.2046 |
| TarGAN | 0.1177 | 3.4285 | 11.7085 | 0.2382 |
| StawGAN | 0.0119 | 3.5163 | 11.8251 | 0.2453 |
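As a reference for how the PSNR column in the table above is typically obtained, the following is a minimal sketch of the standard peak signal-to-noise ratio between a ground-truth image and a translated one. It is not the evaluation code used for the reported numbers: the function name `psnr`, the 8-bit dynamic range (`max_value=255`), and the toy arrays are assumptions made for illustration only.

```python
import numpy as np


def psnr(reference: np.ndarray, generated: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two images of identical shape.

    `max_value` is the largest possible pixel value (assumed 255 for 8-bit images).
    """
    # Cast to float first so that uint8 subtraction cannot wrap around.
    ref = reference.astype(np.float64)
    gen = generated.astype(np.float64)
    mse = np.mean((ref - gen) ** 2)
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


# Toy example (hypothetical data): every pixel is off by 16 gray levels.
reference = np.zeros((4, 4), dtype=np.uint8)
generated = np.full((4, 4), 16, dtype=np.uint8)
print(f"PSNR: {psnr(reference, generated):.2f} dB")  # about 24.05 dB
```

Higher PSNR means a smaller pixel-wise error with respect to the paired ground truth, which is why it can be computed here: the dataset provides aligned RGB-IR pairs.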

We also perform a secondary analysis of the segmentation capability of the proposed model against TarGAN, which is the only one of the compared models that also performs segmentation. The comparison shows that our model outperforms TarGAN. Since segmented images contain large black zones in both domains, the translation task becomes easier to accomplish; the segmentation metrics therefore mainly evaluate the generation of concrete target shapes. The table below reports objective metrics for this task.

| Model | DSC ↑ | S-Score ↑ | MAE ↓ |
| --- | --- | --- | --- |
| TarGAN | 79.34 | 85.08 | 0.0115 |
| StawGAN | 84.27 | 88.61 | 0.0081 |
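To make the segmentation metrics above more concrete, here is a minimal sketch of the Dice similarity coefficient (DSC) and the mean absolute error (MAE) on binary masks. The function names, the `eps` stabilizer, and the toy masks are illustrative assumptions rather than the evaluation code behind the table; the table appears to report DSC as a percentage, whereas this sketch returns a value in [0, 1]. The S-Score is not covered here.

```python
import numpy as np


def dice_coefficient(pred_mask: np.ndarray, true_mask: np.ndarray, eps: float = 1e-8) -> float:
    """Dice similarity coefficient: 2 * |A ∩ B| / (|A| + |B|), in [0, 1]."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    intersection = np.logical_and(pred, true).sum()
    # eps avoids division by zero; two empty masks therefore score 0 here.
    return float(2.0 * intersection / (pred.sum() + true.sum() + eps))


def mean_absolute_error(pred: np.ndarray, true: np.ndarray) -> float:
    """Mean absolute pixel-wise difference between two maps of identical shape."""
    pred = np.asarray(pred, dtype=np.float64)
    true = np.asarray(true, dtype=np.float64)
    return float(np.mean(np.abs(pred - true)))


# Toy example (hypothetical masks): the target covers a 2x2 block,
# the prediction covers the same block plus one extra column (2x3).
true_mask = np.zeros((4, 4), dtype=np.uint8)
true_mask[1:3, 1:3] = 1
pred_mask = np.zeros((4, 4), dtype=np.uint8)
pred_mask[1:3, 1:4] = 1

print(f"DSC: {dice_coefficient(pred_mask, true_mask):.3f}")     # 2*4 / (6+4) = 0.800
print(f"MAE: {mean_absolute_error(pred_mask, true_mask):.3f}")  # 2 / 16 = 0.125
```

Because the masks are mostly background, MAE is dominated by the large black zones and stays small, while DSC depends only on the overlap of the target shapes and is therefore the more discriminative of the two.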

@ARTICLE{,
  author={Sigillo, Luigi and Grassucci, Eleonora and Comminiello, Danilo},
  journal={2023 IEEE International Symposium on Circuits and Systems (ISCAS)},
  title={StawGAN: Structural-Aware Generative Adversarial Networks for Infrared Image Translation.},
  year={2023},
  volume={},
  number={},
  pages={},
  doi={}
}